Research

Deception Cues and Robotic Agents

This was a small study that I conducted along with fellow student Wesley Miller, in which 25 participants were asked to rate three different agents - a human, a robot, and a text-based interface - in terms of their trustworthiness in reporting on the details of an external event. The results of the study indicate that participants were able to identify deception cues - traits that people exhibit when speaking untruthfully - the most successfully in interacting with the human agent. Additionally, the robotic agent was rated to be the most trustworthy of the agents involved, even in cases in which it reported events falsely.

Foreground Segmentation

For a final project in the Computer Vision course, I worked with another graduate student, Emma Turetsky, to implement an adaptive approach to background-foreground segmentation using a weighted clustering technique. The resulting program separates an incoming video feed into background and foreground layers in real time, marking the background region in black for demonstration purposes. This was designed to work even for a low-end video capture peripheral such as a webcam, and has been parallelized for efficiency on multi-core platforms.

19th Century Literature Genre Map

Based on raw texts and other domain-specific information provided by English student Catherine DeRose, I wrote a small set of scripts which output a parsed set of annotations giving location names, frequency of occurrence, map coordinates, and associated literary genres in JSON format. This file can then be loaded into a separate web-based utility that I created using the d3 and Polymaps JavaScript libraries, which overlays this data onto an interactive map. The end result is a map which shows locations from 55 different 19th century novels, filterable based on literary genre and otherwise capable of drilling down into the underlying data using the provided interface functionality.

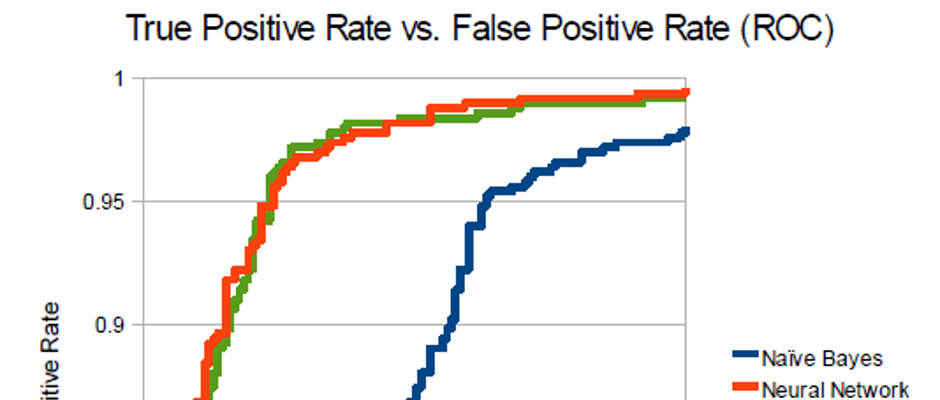

Spam Classification

This was a solo project meant to compare three machine learning implementations - a naive Bayes network, a logistic regressor, and a multilayer neural network - in the task of classifying input e-mail messages as either spam or ham (legitimate mail). This required the design and construction of each of these types of classifier as well as processing and formatting for a set of over 1000 e-mail messages for the systems to work with. The results showed that the naive Bayes approach was generally weaker than the other classifiers working on the unretouched data set, but did marginally better when the data was separated into 'early' and 'late' sets based on timestamp, suggesting that this classifier may be more resistant to changes in spam content over time as compared to the other two.